Context: Robotics in a high-risk area

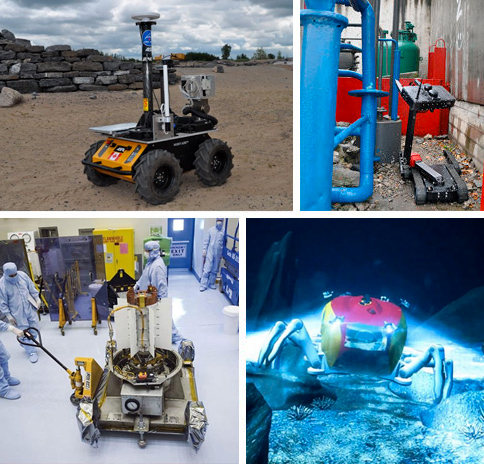

Robots are particularly useful as replacements for humans when work has to be carried out in high-risk areas, for example at the bottom of the sea, in space or in extreme terrain conditions (climate, topography, radioactivity, etc.).

Robots operating in extreme environments

The CEA commissioned Génération Robots to lead a robotics project in a radioactive environment

We worked with the CEA (French Alternative Energies and Atomic Energy Commission) for several years, helping them to improve the working conditions of employees in the nuclear industry.

In 2015 and 2016, the CEA asked Génération Robots to create a behaviour package for “inspection”, “handling of radioactive materials” and “nuclear incident” type scenarios.

You can find out more about our Nuclear Humanoid Inspection and Clean-Up Glove Box Robot project in the following document (in French): Nuclear Humanoid Inspection and Clean-Up Glove Box Robot

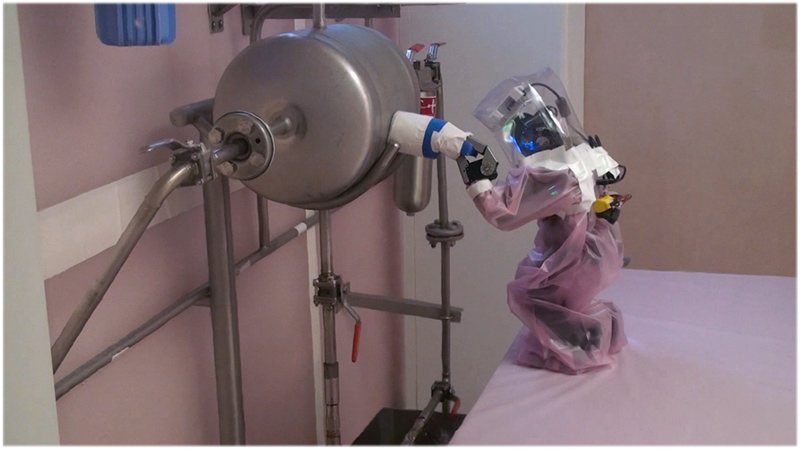

Extract: This research assesses the suitability of using humanoid robots in a nuclear environment. The DARwIn-OP platform was used and modified to enable it to intervene in a nuclear context.

The work consisted of equipping the humanoid with a radiological sensor and with arm control guided by a depth camera.

The tests carried out show that it is possible to take radiological measurements using the integrated sensor and to take smears to assess object contamination.

The DARwIn-OP robot taking a smear to assess object contamination

Our robotics engineering department (the GR Lab) had already carried out a similar study involving a robot with legs, the PhantomX hexapod.

The latest CEA/Génération Robots project: Teleoperation of a robotic arm for nuclear decommissioning

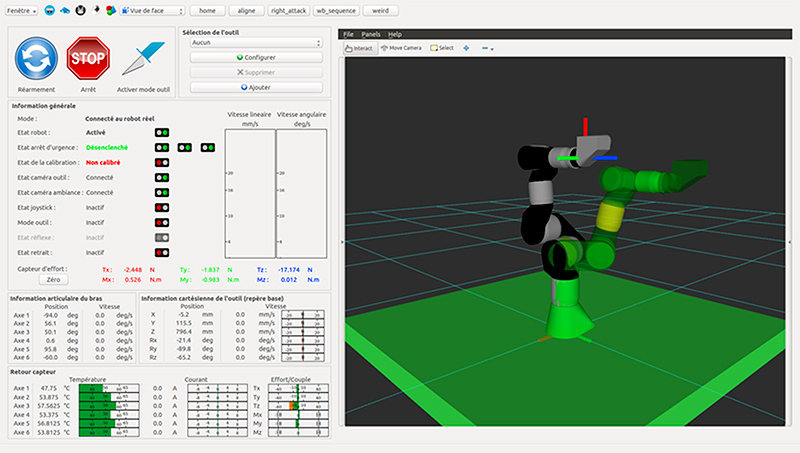

For this new 2017 project, we had to make it possible for a remote operator to perform dismantling operations using a teleoperated robotic arm mounted on a mobile platform.

We were set three objectives for this project, corresponding to three different types of tasks:

- Teleoperation: joint and Cartesian control of the robot arm using a graphical interface and a 6D joystick.

- Sequences: recording and playback of complex sequences of operations and trajectories.

- Monitoring: video feedback from a tool camera and an ambiance camera, a 6D force sensor and 3D model planning.

This project was broken down into 2 phases:

- Hardware part (hardware architecture)

- Software part (software architecture)

Robotic arm assembly and customisation (hardware architecture)

Robotic arm for nuclear decommissioning

We used an arm with 6 degrees of freedom, which is necessary for decommissioning operations (in particular tasks involving a circular saw fitted to the end of the arm for cutting operations).

We built this arm from Schunk PRL modules. Why? Because they offer a very high power-to-weight ratio compared to the other robotic arms on the market.

The arm is controlled over a CAN bus, a system that allows the different sensors and actuators on a robotic platform to communicate with each other via integrated electronic boards. This greatly reduces the amount of cabling required, which is essential for an articulated arm so that the cables do not get tangled up or pulled off.

To be able to detect obstacles in real time, our team developed a script that calculates the torque exerted on each of the Schunk arm’s joints. If the value is too high, the arm has most likely come up against an obstacle.
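As an illustration of this kind of torque check, here is a minimal sketch in Python. It is not the script used on the project: the function names and the per-joint limits in `LIMITS_NM` are hypothetical, and a real system would first subtract the torque expected from gravity and motion before comparing against a threshold.

```python
from typing import List, Sequence

# Hypothetical per-joint torque limits in newton-metres for a 6-joint arm.
LIMITS_NM = [40.0, 40.0, 25.0, 25.0, 10.0, 10.0]


def joints_over_limit(torques_nm: Sequence[float],
                      limits_nm: Sequence[float]) -> List[int]:
    """Return the indices of joints whose absolute torque exceeds its limit."""
    if len(torques_nm) != len(limits_nm):
        raise ValueError("one torque limit per joint is required")
    return [
        i
        for i, (torque, limit) in enumerate(zip(torques_nm, limits_nm))
        if abs(torque) > limit
    ]


def obstacle_detected(torques_nm: Sequence[float]) -> bool:
    """Treat any joint over its limit as a probable contact with an obstacle."""
    return bool(joints_over_limit(torques_nm, LIMITS_NM))
```

Returning the list of offending joints, rather than a single boolean, lets the operator interface show where on the arm the contact probably occurred.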

We installed two cameras on the platform

- A tool camera on the robot arm, with zoom/focus/iris control, necessary for carrying out the required tasks effectively.

- A dome-type ambiance camera, allowing the operator to observe the environment in which the mobile robot is operating. This camera can be fixed either to the ceiling of the room being explored or to the end of a rod mounted on the robot.

An additional force sensor

We installed a force sensor to measure the X/Y/Z forces and MX/MY/MZ moments on the tool.

This allows adjustments to be made to the robotic arm’s movements according to its environment (obstacle, type of material, etc.).

We set up two types of teleoperation:

- Graphical interface (HMI, or Human-Machine Interface): allowing joint and Cartesian control (position/speed)

- 6D joystick (with 6 degrees of freedom, like the robotic arm): for Cartesian teleoperation, in which the operator commands the position and orientation of the tool directly in Cartesian coordinates. We also created a real-time force feedback system (haptic system), which lets the user feel when the robot is nearing its physical limits, which are pre-defined in the interface.
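One simple way to turn "nearing a limit" into a force on the joystick is a linear ramp inside a margin around each limit. The sketch below shows that idea for a single axis. It is an assumption made for illustration, not the feedback law used in the project, and `limit_feedback` with all of its parameters is a hypothetical name.

```python
def limit_feedback(pos: float, lower: float, upper: float,
                   margin: float, max_force: float) -> float:
    """Feedback force along one axis, pushing the operator away from a limit.

    The force is zero in the middle of the workspace, grows linearly once the
    position enters the margin next to a limit, and saturates at max_force
    when the limit is reached or exceeded. Assumes upper - lower >= 2 * margin.
    """
    if margin <= 0:
        raise ValueError("margin must be positive")
    if pos < lower + margin:
        depth = min(1.0, (lower + margin - pos) / margin)
        return max_force * depth       # push towards increasing position
    if pos > upper - margin:
        depth = min(1.0, (pos - (upper - margin)) / margin)
        return -max_force * depth      # push towards decreasing position
    return 0.0
```

For a 6D joystick, the same function could be applied to each translational and rotational axis independently.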

Lights and safety

The robot must be able to illuminate its environment, so we installed controllable lights on the tool and base.

Our engineers also installed several safety devices, including a heartbeat system to check that the control PC remains constantly connected to the robotic arm. They also added an emergency stop button. The end users added a door contactor to detect whether the door is open or closed.
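A heartbeat check of this kind usually comes down to a watchdog timer: the control PC sends a periodic message, and the arm side stops if no message arrives within a timeout. The sketch below shows that logic only. The class name, the timeout value and the injectable clock are assumptions for illustration, not details of the project's implementation.

```python
import time
from typing import Callable


class HeartbeatWatchdog:
    """Tracks the time since the last heartbeat from the control PC."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._timeout_s = timeout_s
        self._clock = clock              # injectable so the logic can be tested
        self._last_beat = clock()

    def beat(self) -> None:
        """Call this each time a heartbeat message is received."""
        self._last_beat = self._clock()

    def is_alive(self) -> bool:
        """True while the last heartbeat is no older than the timeout."""
        return (self._clock() - self._last_beat) <= self._timeout_s
```

In a control loop, the arm would be commanded to stop as soon as `is_alive()` returns `False`. Using a monotonic clock avoids false alarms when the system time is adjusted.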

Creation of the software architecture

ROS middleware

This remotely operated arm, designed for use in a radioactive environment, was developed entirely using ROS middleware, to optimise the system’s modularity and speed up development by drawing on the existing library ecosystem.

If you want to know more about ROS middleware, which we work with a lot, take a look at our feature article: What is ROS?

Low-level communication

Low-level communication compartmentalises and simplifies data transmission between the main computer and the various components, such as the actuators, LEDs, sensors and other electronic components. We used the ros_canopen package, which implements the CANopen protocol for ROS, to communicate with the robotic arm.
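To give an idea of how CANopen addresses several joint modules on one bus, the sketch below computes message identifiers (COB-IDs) from the CANopen pre-defined connection set, where each service has a base identifier to which the node ID (1 to 127) is added. The helper name `cob_id` and the node numbers in the test are hypothetical, the project's actual bus configuration is not described here, and ros_canopen handles this addressing internally.

```python
# Base identifiers from the CANopen pre-defined connection set.
BASE_COB_ID = {
    "EMCY": 0x080,       # emergency messages
    "TPDO1": 0x180,      # first process data object sent by the node
    "RPDO1": 0x200,      # first process data object received by the node
    "SDO_TX": 0x580,     # service data response from the node
    "SDO_RX": 0x600,     # service data request to the node
    "HEARTBEAT": 0x700,  # node heartbeat / error control
}


def cob_id(service: str, node_id: int) -> int:
    """Return the CAN identifier for a service on a given CANopen node."""
    if not 1 <= node_id <= 127:
        raise ValueError("CANopen node IDs range from 1 to 127")
    return BASE_COB_ID[service] + node_id
```

Because lower CAN identifiers win bus arbitration, this layout gives emergency and process data priority over configuration traffic.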

We worked with MoveIt, the standard motion planning library for ROS, for the inverse kinematics and trajectory planning. Originally developed at Willow Garage (also responsible for ROS), MoveIt is fully integrated with ROS.

We introduced some changes in order to more effectively plan difficult Cartesian trajectories and to better anticipate and avoid obstacles.

Control interface

We used the PySide library to develop the graphical interface, which communicates over ROS with the C++ modules that manage the critical aspects. PySide is a free module that binds the Python language to the Qt library. Qt is an object-oriented API developed in C++ that offers, for example, graphical interface, data access and network connection components.

- Sequence recording and playback: it is possible to record and replay points and trajectories, and to create complex sequences of operations involving use of the tool and the force sensor.

- Reflex mechanisms: force sensor values and joint torques are monitored automatically and trigger pre-configured reflex trajectories (moving back by a target time or distance).
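The two features above can be sketched together: a recorded sequence is replayed waypoint by waypoint, and a force reading above a limit triggers a reflex that moves back along the path. This is a simplified illustration under stated assumptions, not the project's code. `RecordedSequence`, `play`, `move_to` and `read_force` are hypothetical names, and the reflex retreats by a number of waypoints instead of a time or distance.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Pose = Tuple[float, float, float]  # simplified: position only, no orientation


@dataclass
class RecordedSequence:
    """An ordered list of poses recorded during teleoperation."""
    waypoints: List[Pose] = field(default_factory=list)

    def record(self, pose: Pose) -> None:
        self.waypoints.append(pose)


def play(sequence: RecordedSequence,
         move_to: Callable[[Pose], None],
         read_force: Callable[[], float],
         force_limit: float,
         retreat_steps: int = 1) -> Tuple[bool, int]:
    """Replay a sequence, retreating if the measured force gets too high.

    Returns (completed, index of the waypoint where the arm ended up).
    """
    for i, pose in enumerate(sequence.waypoints):
        move_to(pose)
        if abs(read_force()) > force_limit:
            # Reflex: back up along the recorded path, then stop the playback.
            back = max(0, i - retreat_steps)
            move_to(sequence.waypoints[back])
            return False, back
    return True, len(sequence.waypoints) - 1
```

Passing `move_to` and `read_force` as callables keeps the playback logic independent of the robot driver, so it can be exercised with fake hardware before running on the arm.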

Mobile base: Husky robot

For the mobile base, we chose the Husky robot, a robotic platform that performs extremely well, even under difficult conditions. Equipped with a powerful four-wheel drive chassis, it can be rapidly customised and can move over rough terrain in extreme conditions (IP44 protection rating).

Our GR Lab engineers specialise in developing innovative, customised robotics and artificial intelligence solutions for professionals, like the robot created for EDF.

If you have an idea for a project, feel free to contact us so we can help you bring it to life. Our service and software will make the difference!

Contact us at contact@generationrobots.com or by phone at 05 56 39 37 05.